Why pay when you can run it locally for free? The fact that I ask this question clearly shows that I have finally completed my Dutch integration process. 😊 Here I will focus on the tools themselves, not on the models.

My 2 finalists

What do they both have in common?

- Local model support: Both prioritize privacy by enabling offline usage of AI models.

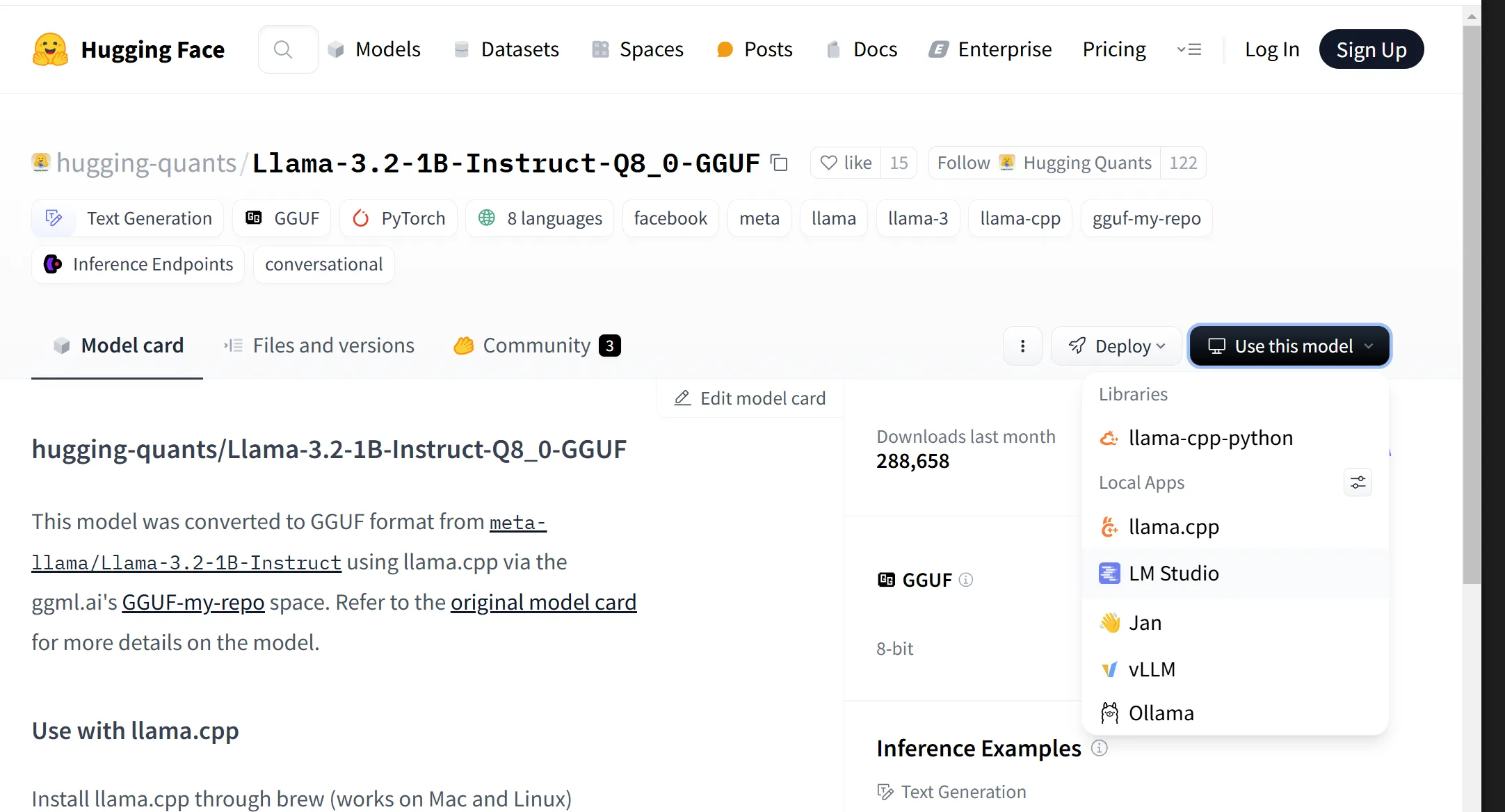

- Tight integration with Hugging Face: in just two clicks you can start using a Hugging Face model in either tool.

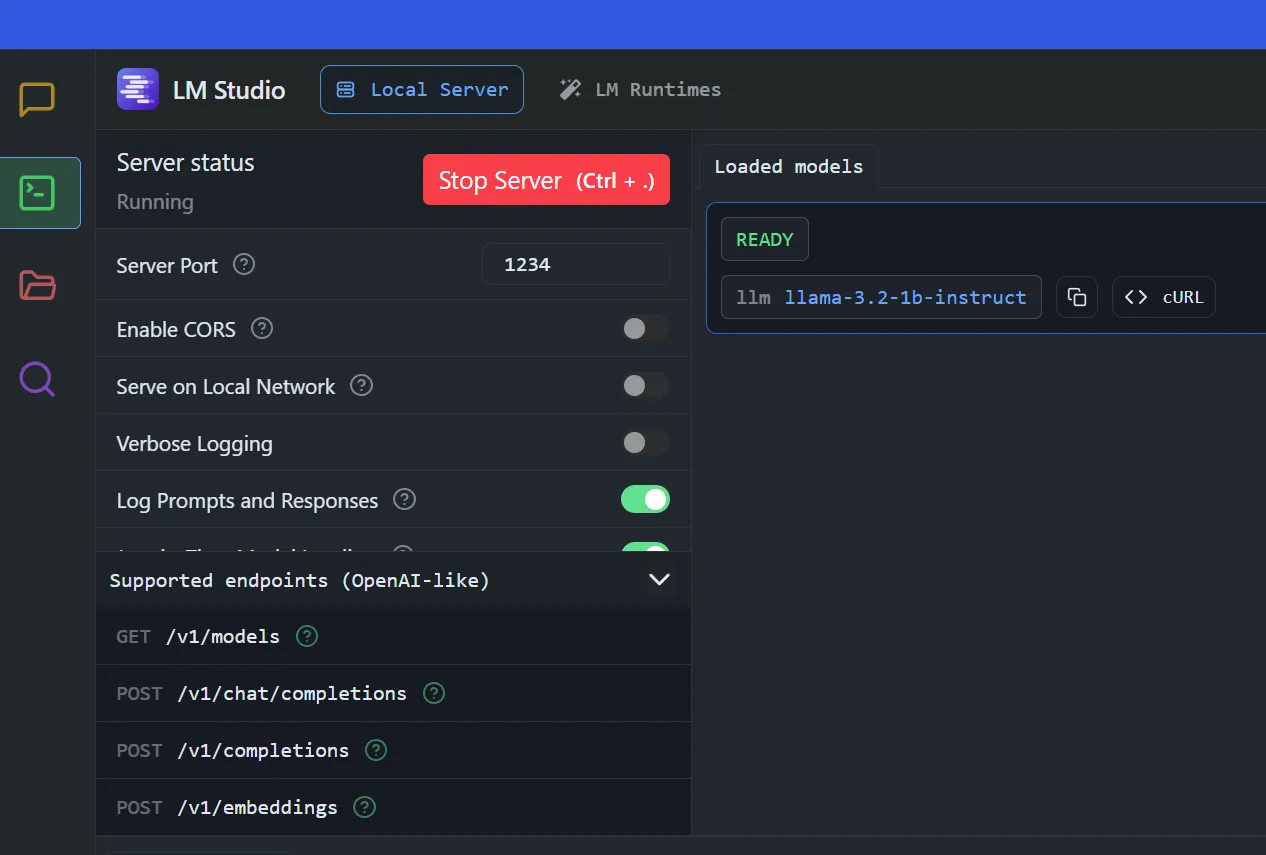

- Both expose models over HTTP (server mode).

- Both also let you change the network binding so the server is exposed on your LAN. This matters if you work in WSL and host the tool on Windows itself: from WSL, the server is no longer reachable at localhost.
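Because both tools serve over HTTP, the only thing that changes between them, or between localhost and a LAN address, is the base URL your code talks to. Here is a minimal sketch, assuming the default ports I believe each tool uses (1234 for the LM Studio server, 11434 for Ollama). Both are configurable, so check your own install. The tool keys and the IP address below are hypothetical examples.

```python
from typing import Optional

# Default server ports (assumption: both are configurable, check your install).
DEFAULT_PORTS = {
    "lmstudio": 1234,
    "ollama": 11434,
}


def base_url(tool: str, host: str = "localhost", port: Optional[int] = None) -> str:
    """Return the HTTP base URL of a local LLM server.

    host="localhost" works when client and server share a machine; once the
    tool is bound to the LAN, pass the server machine's IP address instead.
    """
    key = tool.lower().replace(" ", "")
    if key not in DEFAULT_PORTS:
        raise ValueError(f"unknown tool: {tool!r}")
    return f"http://{host}:{port or DEFAULT_PORTS[key]}"


print(base_url("LM Studio"))               # http://localhost:1234
print(base_url("ollama", "192.168.1.50"))  # hypothetical LAN address
```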

LM Studio

To me, LM Studio is the ‘fancy’ one, with an amazing UI/UX. If you love customizing things through a UI, this is the one for you.

My personal short list:

- The best UI/UX I have seen so far.

- Very clear control of model parameters.

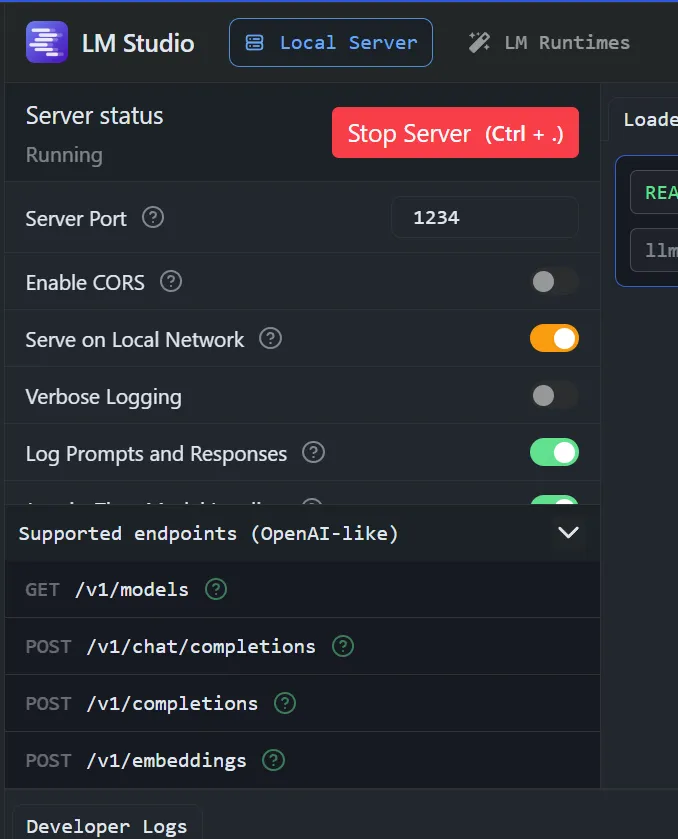

- You can use it as a server: not just for chat, but also as a developer calling it from your own code.

- Compatible with the OpenAI API, so you can reuse the same code for development and production.

- Easy support for serving on your LAN.
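Because the server speaks the OpenAI chat-completions format, a plain HTTP POST is enough to use it from code. Below is a minimal sketch using only the Python standard library. It assumes the server runs at LM Studio's default address (http://localhost:1234) and that you replace the placeholder "local-model" with the identifier of a model you have loaded. With the official openai package you would instead point its base_url at the same /v1 address.

```python
import json
import urllib.request

# Assumption: LM Studio's server on its default port; adjust host/port as needed.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions POST request (not sent yet)."""
    payload = {
        "model": model,  # placeholder: use the identifier of a loaded model
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Once the server is running, `print(chat("Write a Python function that calls a REST API."))` should print the model's answer. Swapping BASE_URL for a LAN address is all it takes to call the same server from another machine.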

Some screenshots of the tool in action:

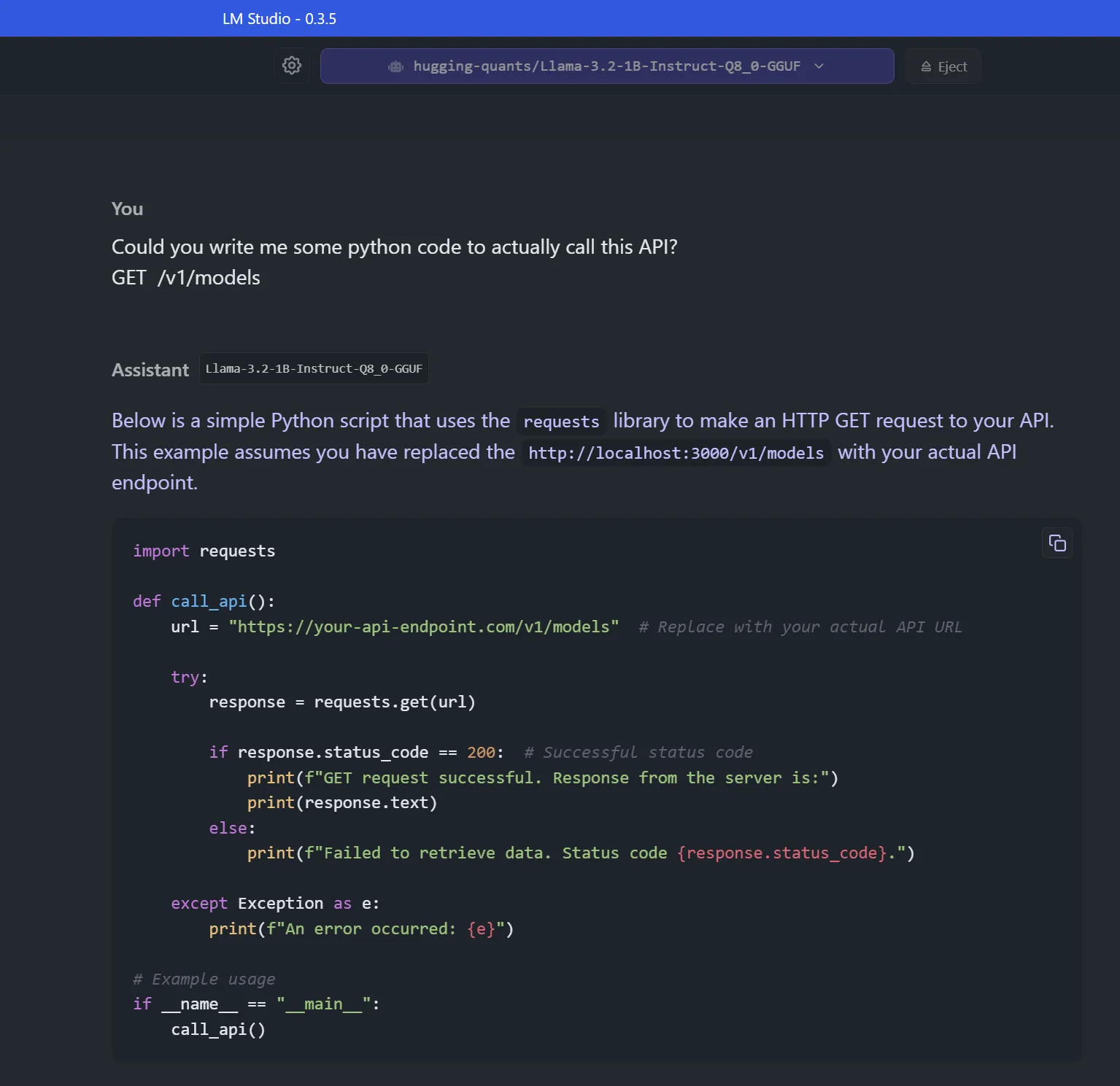

Example: just asking for a Python API call (super simple, no challenge):

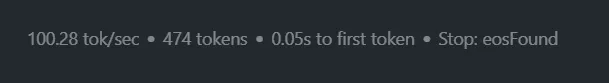

The response was amazingly fast.

The UI/UX is just amazing.

Ollama

Ollama is, in my humble opinion, the simpler of the two, and if you prefer the command line over a UI, this might be the one for you.

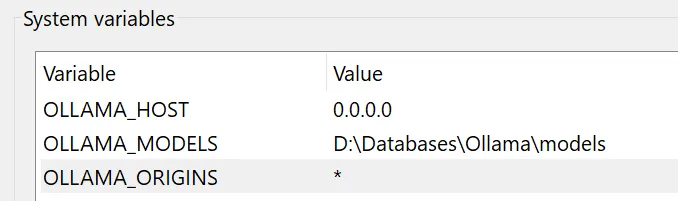

Ollama settings (via env vars)

Changing the model location: in my case, I have a pretty small C: drive, so it’s wise to make sure models can be stored on a separate drive. This was possible, though not as easy as in the LM Studio UI, but there are environment variables for each setting.
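For the model location, the variable is OLLAMA_MODELS. On Windows you would normally set it once as a user environment variable and then restart Ollama. The sketch below shows the same idea for a single run instead: it starts `ollama serve` with OLLAMA_MODELS pointing at another drive. It assumes the ollama executable is on your PATH, and the D:\ollama\models path is a hypothetical example. If the Ollama tray app is already running, quit it first, because the default port is probably already taken.

```python
import os
import subprocess
from pathlib import Path
from typing import Mapping, Optional


def ollama_env(models_dir: Optional[str] = None,
               base: Optional[Mapping[str, str]] = None) -> dict:
    """Copy an environment (default: the current one) and optionally set OLLAMA_MODELS."""
    env = dict(os.environ if base is None else base)
    if models_dir:
        env["OLLAMA_MODELS"] = str(Path(models_dir))
    return env


def start_ollama(models_dir: str) -> subprocess.Popen:
    """Start `ollama serve` storing models in models_dir (assumes ollama is on PATH)."""
    Path(models_dir).mkdir(parents=True, exist_ok=True)
    return subprocess.Popen(["ollama", "serve"], env=ollama_env(models_dir))
```

Usage: `proc = start_ollama(r"D:\ollama\models")`. Only the child process sees the variable, so nothing else on the system is changed.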

Exposing Ollama to your network

This is very useful if you use both Windows and WSL. By default, WSL 2 will not let you reach the Windows host via localhost (127.0.0.1), so you need to change your environment variables in Windows (assuming Ollama is installed there, of course). The OLLAMA_HOST and OLLAMA_ORIGINS variables are what allow this access: setting OLLAMA_HOST to 0.0.0.0, for example, makes Ollama listen on all network interfaces instead of only on localhost.

Note: you need to restart the Windows app for this to take effect, and you will probably get the Windows Firewall dialog afterwards, so click “Allow access” if prompted.
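From inside WSL, you then need the Windows host’s IP address instead of localhost. Here is a minimal sketch, assuming WSL 2 in its default NAT networking mode, where the auto-generated /etc/resolv.conf usually lists the Windows host as its nameserver. If you have customized resolv.conf, this will not hold, and the default gateway shown by `ip route` is an alternative. The sketch also assumes Ollama’s default port 11434. The helper only builds the base URL; it does not contact the server.

```python
import re
from typing import Optional

OLLAMA_PORT = 11434  # Ollama's default port (assumption: not changed via OLLAMA_HOST)


def host_ip_from_resolv(text: str) -> Optional[str]:
    """Return the first IPv4 nameserver found in resolv.conf content."""
    for line in text.splitlines():
        m = re.match(r"\s*nameserver\s+(\d{1,3}(?:\.\d{1,3}){3})\s*$", line)
        if m:
            return m.group(1)
    return None


def windows_ollama_url(resolv_path: str = "/etc/resolv.conf") -> str:
    """Build the Ollama base URL for the Windows host as seen from WSL 2."""
    with open(resolv_path, encoding="utf-8") as f:
        ip = host_ip_from_resolv(f.read())
    if ip is None:
        raise RuntimeError(f"no IPv4 nameserver in {resolv_path}")
    return f"http://{ip}:{OLLAMA_PORT}"
```

Usage from a WSL shell: `python3 -c "import wsl_host; print(wsl_host.windows_ollama_url())"`, where wsl_host is a hypothetical module name for the file above.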

Using Ollama

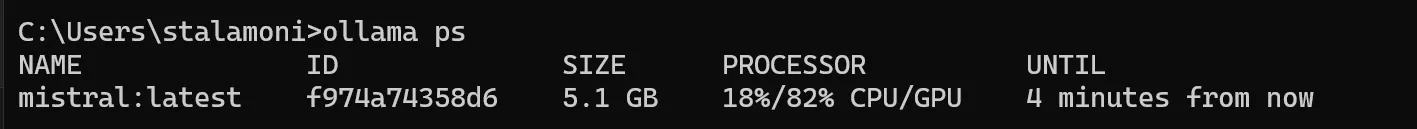

To check whether anything is running, run the ollama ps command to see model activity.
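The same check can be done over HTTP, which is handy from scripts or from WSL. Here is a minimal sketch, assuming Ollama’s GET /api/ps endpoint, which returns the models currently loaded in memory (what `ollama ps` shows), on the default address. It also assumes the JSON response contains a "models" list whose entries have a "name" field. Field names may differ between versions, so check the API docs for yours.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434"  # assumption: default host and port


def running_model_names(ps_response: dict) -> List[str]:
    """Extract model names from an /api/ps JSON response."""
    return [m.get("name", "?") for m in ps_response.get("models", [])]


def fetch_running(base_url: str = OLLAMA_URL) -> List[str]:
    """Query Ollama for the models currently loaded in memory."""
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=5) as resp:
        return running_model_names(json.load(resp))
```

An empty list from `fetch_running()` means the server is up but no model is loaded. A connection error means Ollama itself is not reachable at that address.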

