Semantic Search is a powerful concept built on two foundational AI techniques: Embeddings and Vector Search. This article provides a beginner-level introduction to these principles. Embeddings are numerical representations of text that capture semantic meaning. Semantic Search also plays a key role in Retrieval-Augmented Generation (RAG) systems, often referred to as the “find relevant documents” step. This step retrieves context to support subsequent tasks like LLM-powered chat or Q&A.

What Problem Are We Trying to Solve?

Traditional search techniques like Keyword search, Soundex, and Regex had significant limitations: false positives, lack of effective ranking, poor language support, and no handling for synonyms. Semantic Search takes a different approach, solving these issues by focusing on meaning rather than exact keywords.

How Do Embeddings Solve This Problem?

Text embedding is the process of converting text into a numeric vector. These vectors provide a condensed and mathematically efficient way to represent and work with text, reducing data size and making indexing much more efficient.

Embeddings allow us to rethink search by focusing on concepts rather than specific keywords. You can search by typing a concept freely, as the input (search text) is also converted to a vector.

With vectors, we can apply advanced techniques to find similarities between documents. By creating a vector representation of the search input and comparing it to other document vectors, we enable “semantic search”.

Considerations

When building a semantic search solution, consider the following:

Design the Flow

Designing the flow is an important part of the process. It goes beyond the scope of this article but can be quite challenging. Consider questions like: When and how often does the data change? Where should the embedding code be written? How often should embeddings be generated? What is an acceptable delay? (These are typically questions that a data engineer asks when setting up the data pipeline to update the vector database.)

Selecting an Embedding Model

Several factors affect this process:

- Language: Is your text only in English, or is it multilingual?

- Dimensions: The model determines the vector dimensions, which needs to be considered due to factors explained below.

- Deployment: Where will you run this? Locally or with an LLM provider?

In most example articles and references, text-embedding-ada-002 is often cited as the best model, but this changes quickly. Check the OpenAI Embedding Models and API Updates page (January 2024):

We are introducing two new embedding models: a smaller, highly efficient

text-embedding-3-smallmodel, and a larger, more powerfultext-embedding-3-largemodel.

Choosing the larger model might seem like the obvious choice, but you need to consider the impact on the following factors:

- Cost: The larger model is 7x more expensive than the smaller one.

- Volume: The larger model has double the dimensions, increasing storage space and potentially impacting costs.

- Performance: Larger embeddings might no longer fit into memory, negatively affecting performance. Careful consideration is needed before choosing a model.

Applying testing criteria to define which model works better for a use case is challenging. So far, I have done this manually using basic test cases, but a more professional approach is needed—perhaps a topic for a future article.

Note: For this simple test, I chose the smaller model (text-embedding-3-small), but I am also planning to test the Ollama nomic-embed-text model in the coming days.

Metadata Consideration 🏷️

This is something you need to think about upfront to avoid redoing the embedding process. Metadata is extra information added to the embedding table, allowing you to do interesting things, like filtering during the query process. For instance, in our example below, I add the “title” as extra metadata to evaluate if the query response makes sense.

Link to the Original Document 🔗

One of the parameters of the API call is the ID. This ID helps link the document back into your data flow. Since in our example, we are using MongoDB documents, we use the internal ObjectID for a strong link.

Where to Store Embeddings: Vector Databases

Embeddings require specialized storage solutions designed to handle high-dimensional vector data efficiently. Traditional databases are not optimized for embedding vectors, necessitating the use of vector databases.

Introduction to pgvector

PGVector is a powerful open-source extension for PostgreSQL that enables native vector storage and similarity search. It allows developers to:

- Store embedding vectors directly in Postgres.

- Perform approximate nearest neighbor searches.

- Support multiple distance metrics like cosine, L2, and inner product similarity.

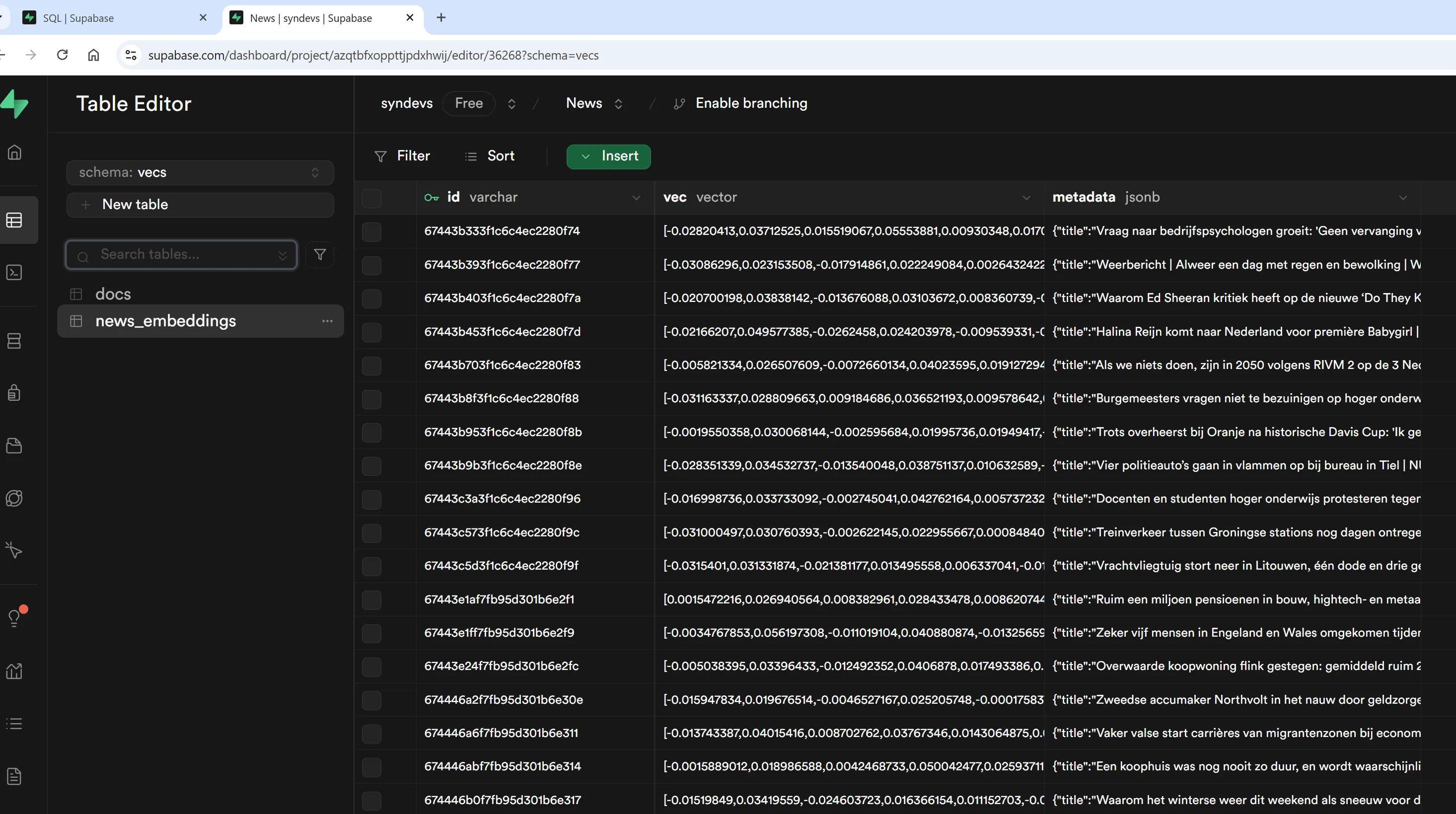

Vector Storage with Supabase and pgvector

For developers familiar with PostgreSQL, Supabase offers a solution by implementing a vector database using Postgres and pgvector. This approach combines the reliability of Postgres with modern vector storage capabilities. Supabase’s “free plan” is sufficient for initial testing and development.

Practical Example Code

Here are some code snippets to illustrate the process. It’s all quite straightforward:

- Get pending MongoDB documents not yet added to the vector.

- Call OpenAI to generate embeddings for them.

- Save the resulting vector and metadata into Supabase.

The Main Loop

# Fetch documents that have not been processed in batches

batch_size = 3000

document_batch = get_documents(batch_size)

# Process each document in the batch

for doc in tqdm(document_batch, desc="Processing Documents"):

try:

title = doc.get("title", "")

text = doc.get("text", "")

document_id = doc["_id"]

# Generate embeddings for title and text

combined_text = f"{title} | {text}"

embedding = generate_embeddings(combined_text)

# Save to Supabase

save_embeddings_to_supabase(document_id, embedding, title)

# Mark document as processed in MongoDB

collection.update_one({"_id": document_id}, {"$set": {"processed": True, "processed_date": datetime.utcnow()}})

except Exception as e:

print(f"Error processing document {doc['_id']}: {e}")

Generating Embeddings Using OpenAI

Below is a simple example to call OpenAI using their Python package to create embeddings:

# Generate embeddings using OpenAI API, handling long text by splitting into chunks

def generate_embeddings(text: str):

text_chunks = split_text_into_chunks(text, max_tokens=1000)

embeddings = []

for chunk in text_chunks:

response = openai.embeddings.create(

input=[chunk],

model=embedding_model

)

embeddings.append(response.data[0].embedding)

# Average the embeddings of all chunks to create a final representation

final_embedding = np.mean(embeddings, axis=0)

return final_embedding.tolist()

Saving to Vector Database 💾

Save embeddings to Supabase, linking them with the Mongo document ID:

def save_embeddings_to_supabase(document_id, embedding, title):

embedding_array = np.array(embedding, dtype=np.float32)

# Validate embedding before upsert

if len(embedding) == 0:

raise ValueError("Empty embedding")

# Add records to the collection

embeddings_collection.upsert(

records=[

(

str(document_id), # Linking to MongoDB document_id

embedding_array, # The vector

{"title": title} # Associated metadata

)

]

)

After that step, you should see rows in the vector table.

If the table is large, you might want to create an index: Creating an index can improve query performance, especially for large tables.

embeddings_collection.create_index()

Now the Fun Part: The Query! 🎉

Below is an example of how to perform a semantic query to find similar documents:

# Generate an embedding for your search query

embedding = generate_embeddings("Utrecht huis kopen")

embedding_array = np.array(embedding, dtype=np.float32)

try:

# The "limit" parameter specifies how many similar documents to retrieve

results = embeddings_collection.query(data=embedding_array, limit=10, include_metadata=True, include_value=True)

for result in results:

document_id = result[0]

similarity_score = result[1]

metadata = result[2]

print(f"Document ID: {document_id}, Similarity Score: {similarity_score}, Metadata: {metadata}")

except Exception as e:

print(f"Error querying for similar documents: {e}")

The result

Final Remarks

- Embeddings are quite cheap when using the small model. For example, embedding 5,000 documents cost me $0.09 (USD). Cost only becomes significant at a very large scale or when using the large variant of the model.

- For smaller projects, almost all options will work fine. Complexities arise with very large datasets, which is a topic for another article.

- Improvements in how to test models for specific use cases remain an open question that needs further exploration.

- pgvector is supported in various options, not just Supabase. For example, check Amazon’s AWS option, or run it via Docker.